Everyone agrees on the importance of quality assurance (QA), especially in breast screening. But formal audits are time-consuming and infrequent, and often don't address individual performance. A group of Dutch researchers has addressed the issue with a self-test specifically designed to let breast imagers know how they are doing.

In a test drive of the system, the researchers found that it performed well in delivering feedback to a group of radiologists whose performance in interpreting screening mammograms was measured against a reference standard set by an expert panel. However, there is room for improvement, particularly if the program is to be used formally as part of a Dutch national QA program, according to a study published online on 22 September in European Radiology.

The Dutch National Expert and Training Center for Breast Cancer Screening (NETCB) is responsible for ensuring and improving the clinical and technical quality of the country's breast cancer screening program. This is usually achieved through audits; however, such reviews take a lot of time and do not provide information on performance at an individual level. As a supplement to audits, several other countries have developed screening test sets.

"In the U.K. and Australia, for instance, the self-test is widely accepted as a quality improvement tool," wrote Janine Timmers, PhD, from NETCB and the department for health evidence at Radboud University Medical Center in Nijmegen, the Netherlands, and colleagues. "It presents participants with clear performance parameters and also provides immediate, individual feedback on missed lesions and false-positive selections. Readers are also able to compare performance against regional or (inter)national benchmarks."

Currently, Dutch screening radiologists organize their feedback locally and receive feedback from the NETCB only during audits held every three years, and that feedback is given per team rather than per individual.

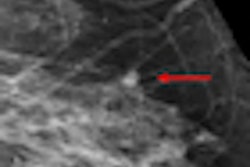

An expert panel of three radiologists with more than 10 years of experience developed the test set, which consisted of 60 screening mammograms, including 20 malignancies, from women participating in the Utrecht screening program. Each screening examination comprised digital mammograms with two views of each breast, along with prior biennial mammograms if available.

To increase difficulty, 10 nonmalignant cases with subtle signs or dense breasts were included in the test set. The reference standard for the test was set by an expert panel of three radiologists who read the mammograms in consensus. The test set was then entered into Ziltron, a commercially available software application for QA, self-testing, and education.

Next, a total of 144 radiologists were invited to analyze the cases in the Ziltron database using their regular diagnostic workstations. Radiologists were aware the set was enriched, but were not aware of the total number of breast cancers.

Participants assessed the screening cases for findings such as location, lesion type, and BI-RADS category. Timmers and team then analyzed their performance, looking at the area under the receiver operating characteristic (ROC) curve (AUC), case and lesion sensitivity and specificity, agreement (kappa), and correlation between reader characteristics and case sensitivity (Spearman correlation coefficients).

A total of 110 radiologists completed the test. The median AUC was 0.93, case and lesion sensitivity were both 91%, and case specificity was 94%. Agreement was substantial for recall (κ = 0.77) and laterality (κ = 0.80), and moderate for lesion type (κ = 0.57) and BI-RADS (κ = 0.45). There was no correlation between case sensitivity and reader characteristics.
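For readers curious about how figures of this kind are derived, the following is a minimal Python sketch, not the study's actual analysis. It computes case sensitivity, case specificity, and Cohen's kappa for a single hypothetical reader against a reference standard. The function names and the reader's decisions are invented for illustration; only the 60-case, 20-malignancy split mirrors the test set. The article does not say which kappa variant the researchers used, so Cohen's kappa for two raters is an assumption here.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(
    reader: Sequence[bool], reference: Sequence[bool]
) -> Tuple[float, float]:
    """Case-level sensitivity and specificity of recall decisions.

    True means "recall"; `reference` is the reference-standard decision.
    """
    pairs = list(zip(reader, reference))
    tp = sum(r and t for r, t in pairs)            # correctly recalled
    fn = sum((not r) and t for r, t in pairs)      # missed
    tn = sum((not r) and (not t) for r, t in pairs)  # correctly not recalled
    fp = sum(r and (not t) for r, t in pairs)      # false-positive recall
    return tp / (tp + fn), tn / (tn + fp)


def cohen_kappa(a: Sequence[bool], b: Sequence[bool]) -> float:
    """Cohen's kappa: agreement between two raters, corrected for chance."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    p_a = sum(a) / n  # share of cases rater a recalls
    p_b = sum(b) / n  # share of cases rater b recalls
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    if expected == 1:  # both raters give one identical answer for every case
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical data: 20 cases the reference recalls, 40 it does not.
reference = [True] * 20 + [False] * 40
# Invented reader: recalls 18 of the 20, plus 3 of the other 40.
reader = [True] * 18 + [False] * 2 + [True] * 3 + [False] * 37

sens, spec = sensitivity_specificity(reader, reference)
kappa = cohen_kappa(reader, reference)
print(f"sensitivity={sens:.2f} specificity={spec:.3f} kappa={kappa:.2f}")
```

On the conventional Landis and Koch scale, kappa values between 0.41 and 0.60 are read as moderate agreement and values between 0.61 and 0.80 as substantial, which matches the labels used for the results above.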

"We intend to make the self-test obligatory for all screening radiologists in order to fulfill their registration requirements," Timmers and colleagues wrote. "We do not expect to set a minimal acceptable threshold in the near future because we do not know how test-set performance correlates with screening performance."

That uncertainty is especially relevant in the Netherlands, which has a low recall rate of 20 per 1,000 screened women, the researchers wrote.
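A rough back-of-the-envelope calculation shows why a test set that mirrored this recall rate would be impractical. The sketch below is illustrative only and is not taken from the study. The function names and target counts are assumptions; the only figure from the article is the recall rate of 20 per 1,000.

```python
# Recall rate reported in the article: 20 recalls per 1,000 screened women.
RECALLS = 20
SCREENED = 1000


def expected_recalls(set_size: int) -> float:
    """Expected number of recall-worthy cases in a set with realistic prevalence."""
    return set_size * RECALLS / SCREENED


def cases_needed(target_recalls: int) -> int:
    """Smallest set size expected to contain at least `target_recalls` such cases."""
    # Integer ceiling division avoids floating-point rounding surprises.
    return (target_recalls * SCREENED + RECALLS - 1) // RECALLS


# A 60-case set at the realistic rate would hold only about one such case.
print(expected_recalls(60))
# Hypothetical targets: 20 or 30 recall-worthy cases.
print(cases_needed(20), cases_needed(30))
```

Under these assumptions, a set the size of the one used in the study would contain barely one case warranting recall, and matching its 20 positive cases would take about 1,000 mammograms.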

"A realistic set would have to contain thousands of cases to allow sufficient data to be collected for statistical analyses," they added.

The test set also has limitations of its own: the answers are oversimplified and the reading environment is artificial.

However, the analysis is especially useful because it can be used to advise individual screening radiologists on areas of improvement. In some cases, the expert panel assigned a mammogram as BI-RADS 1 or 2, but several readers assigned the case BI-RADS 5.

"When reviewing these cases retrospectively, the expert panel did not recall these cases because of the benign appearance of mammographic findings," the researchers noted. "However, our expert panel could understand the choice for recall if these screens were read by inexperienced screening radiologists."

Several cases with clear cancer signs (BI-RADS 5) were missed by some readers and should have been recalled; these are examples of cases that could be helpful in designing an individual training course, which the NETCB is working on.

The researchers offered participants the opportunity to evaluate their own performance and determine individual areas of improvement. During a feedback conference, results from the whole group were presented. The first aggregated results show the continuing medical education training program should primarily be targeted at reducing interobserver variation in the interpretation and description of abnormalities.

"Future research needs to be performed to monitor whether performance of screening radiologists will improve," the researchers concluded.