VIENNA -- The EU AI Act is already partially in effect, and hospitals and vendors have until August 2026 to comply, experts told ECR 2025 attendees at a state-of-the-art symposium on Wednesday.

"The AI Act is already here, it's already being deployed," stressed radiologist Dr. Hugh Harvey, managing director of Hardian Health, Haywards Heath, U.K. "With the legislation, transparency will ensure 'regulatory hide and seek' is no longer possible. All AI vendors must provide publicly available intended use and performance data."

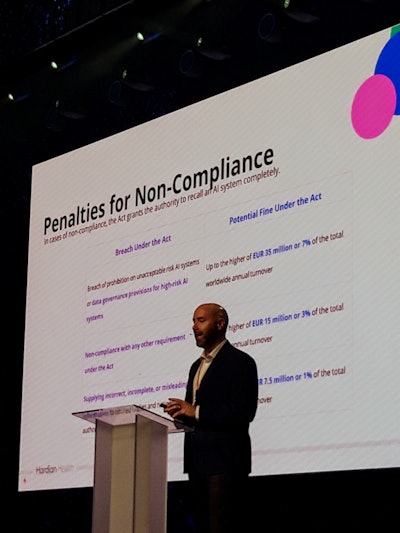

The AI Act is increasing transparency and eliminating regulatory "hide and seek," said Dr. Hugh Harvey. All pictures courtesy of Claudia Tschabuschnig.


While many AI tools are already on the market, the act introduces stricter regulatory requirements and substantial fines for noncompliance, putting considerable pressure on healthcare providers and vendors. Some provisions, including bans on AI systems posing an unacceptable risk, are already being enforced.

Because AI in radiology is classified as high-risk, it is subject to strict conformity assessment (already required for CE marking, but now with stricter oversight) and to postmarket surveillance, including continuous monitoring and reporting of adverse events, according to Harvey. Every radiology department, radiologist, and radiographer using AI is legally considered a deployer of a high-risk AI system -- and therefore carries specific obligations.

Risk management systems must be in place to assess AI reliability and prevent harm, and postmarket surveillance is required to monitor AI performance continuously and detect bias or degradation over time, he continued. Transparency requirements mandate clear documentation of how AI is used and of its limitations, and human oversight is mandatory, particularly for autonomous AI systems involved in critical decision-making.

The European Society of Radiology (ESR) has outlined key recommendations to help hospitals and developers navigate the new AI landscape. AI education must be integrated at all levels, from radiology and radiographer curricula to hospital-level AI training programs, and even patients must be educated about AI use. "Education is paramount," noted Harvey. In addition, AI deployment must be documented, covering both how AI should be used and how it should not be used.

By August 2026, AI "regulatory sandboxes" will be legally required in every EU member state, yet none has been set up so far. These sandboxes allow AI systems to be tested and validated in controlled environments before market release. Harvey stressed that their absence leaves a crucial gap in AI regulation.

The AI Act sets strict penalties for violations, with fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher. However, enforcement mechanisms will not be in place until August 2026, meaning penalties cannot yet be imposed.

The AI Act has sparked political tensions, and some major tech companies are pulling back from Europe due to its regulatory uncertainty. "Our regulation has prevented our population from coming to potential harm, whereas the developers claim it stifles innovation. There's a geopolitical battle between America and Europe on AI regulation," Harvey pointed out.

Mind the Gap

In the same session, Dr. Ritse Mann, PhD, a breast and interventional radiologist at Radboud University Medical Center in Nijmegen and the Netherlands Cancer Institute, emphasized the need to take a step back and reassess how effective AI truly is in healthcare.

AI must prove its real-world impact beyond technical accuracy to truly benefit patients, stated Dr. Ritse Mann, PhD.


Currently, most AI studies focus on technical performance and diagnostic accuracy but do not demonstrate whether AI actually improves patient outcomes, leaving a gap between promising AI capabilities and real-world clinical impact.

"Most of what we currently see published in the big journals doesn't go beyond the development effects," Mann said, adding that many AI studies stop at reader studies (AI vs. radiologists in controlled tests) and don't assess real-world impact. "The 'laboratory effect' shows that AI tools often perform better in studies than in real hospital environments."

Postmarket surveillance is critical for ensuring real-world effectiveness but remains underdeveloped. Efforts to reinforce these requirements should begin this month, he added.