Radiographers are on shaky ground when it comes to explaining decisions aided by artificial intelligence (AI) algorithms to patients, say U.K. researchers. In an open-access article published on 1 July in Radiography, they suggest the issue could be holding back wider use of the technology.

Radiographers were asked in a national survey if they trusted AI algorithms to help alleviate the current backlog of unreported images in the U.K. A majority said yes, but when asked how confident they would be explaining AI decisions to others, responses were tepid, researchers found.

"Confidence does not equate to competence and therefore education of the workforce and increased transparency of the systems are suggested," wrote corresponding author Clare Rainey, a diagnostic radiography lecturer and PhD researcher at Ulster University in Newtownabbey, Northern Ireland.

The U.K.'s National Health Service (NHS) is under significant pressure due to a national shortage of radiographers, and AI support in reporting could help minimize the backlog of unreported images, according to the authors. In this study, the researchers explored the perceptions of reporting radiographers regarding AI and aimed to identify factors that affect their trust in these systems.

Rainey and colleagues distributed a survey open to all U.K. radiographers through social media (LinkedIn and Twitter) and made it available from February to April 2021. They received 411 full survey responses and focused their analysis on 86 respondents who indicated that image reporting was a part of their role.

When asked if they understood how an AI algorithm makes its decisions, 62% (n = 53) of reporting radiographers selected "agree" options indicating they were confident on the issue, according to the results.

On the other hand, 59.3% of respondents (n = 51) disagreed that they would be confident in explaining AI decisions to other healthcare practitioners. Similarly, only 29.1% (n = 25) agreed that they would be confident explaining AI decisions to patients and carers.

The respondents were also asked to indicate their trust in AI for diagnostic image interpretation decision support on a 0 to 10 scale, with results indicating a mean score of 5.28 and median of 5.

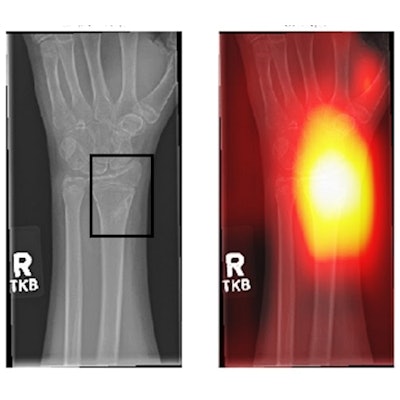

Finally, the respondents were asked which features of an AI system may offer assurance of trustworthiness. The most popular choices were "indication of the overall performance of the system" (n = 76), and "visual explanation" (n = 67), the authors wrote.

That radiographers are confident in understanding how AI systems reach decisions but less confident in explaining the process to others points to a hurdle in implementing the technology, the authors wrote. Ultimately, only 10.5% (n = 9) of reporting radiographers were currently using AI as part of their reporting at all, the authors found.

"Developers should engage with clinicians to ensure they have the information they need to allow for appropriate trust to be built. Awareness of how clinicians interact with an AI system may promote responsible use of clinically useful AI in the future," Rainey et al. concluded.

UKIO 2022 presentation

The findings of this survey were also presented at the UK Imaging & Oncology (UKIO) Congress, held in Liverpool from 4 to 6 July.

"This research shows we need to educate radiographers so that they can be sure of diagnosis and know how to discuss the role of AI in radiology with patients and other healthcare practitioners," Rainey said in a statement from the UKIO.

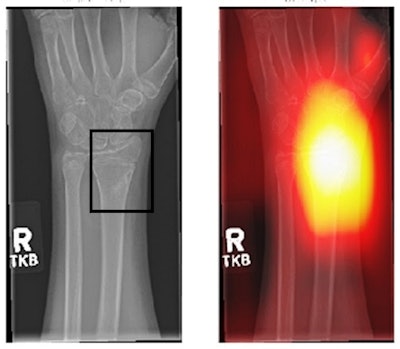

(Left) Area with fracture (in the box). This may not be easily picked up by an inexperienced radiographer. (Right) An AI-generated heatmap, directing the radiographer to check the area. Figure courtesy of Clare Rainey and MURA dataset.

The survey highlights issues with the perceptions of reporting radiographers in the U.K. regarding the use of AI for image interpretation, according to Rainey.

"There is no doubt that the introduction of AI represents a real step forward, but this shows we need resources to go into radiography education to ensure that we can make the best use of this technology," she said. "Patients need to have confidence in how the radiologist or radiographer arrives at an opinion."

The study was presented on behalf of Rainey by co-author Nick Woznitza, PhD.