U.K. researchers have provided fresh evidence about the feasibility of transfer learning from pretrained convolutional neural networks (CNNs) for fracture detection. They demonstrated that the accuracy with which the technology predicts fractures can be increased by removing surplus imaging data, which reduces the opportunity for overfitting.

At ECR 2018 in Vienna, they described a technique in which the image preprocessing for fracture detection is semiautomated via template matching. The automated step cropped the images correctly in the majority of cases. More sophisticated methods, for example using CNNs for anatomical localization, may well improve the accuracy of this step.

"The process (of automated fracture detection) could be applied to a large number of medical imaging tasks and has the potential to improve workflow and reduce diagnostic delay," noted lead presenter Dr. Mark Thurston, a radiology registrar of the Peninsula training scheme in Plymouth, and colleagues.

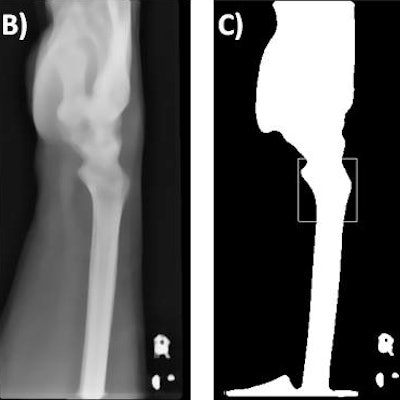

Lateral radiographs demonstrate a fracture (left) and no fracture (right). Notice how much of the image is surplus to the diagnosis. All images courtesy of Dr. Dan Kim, Royal Devon & Exeter Hospital, U.K.

CNNs are a machine-learning tool well suited to the analysis of visual imaging; they were inspired by the architecture of the human brain, particularly the visual cortex, the researchers explained.

Using layers of increasing complexity to combine simple image features can lead to the identification of specific patterns with high accuracy. The network learns these features by training on many example images for which the desired outcome is already known, an instance of supervised machine learning, said Thurston, acknowledging the contribution of his fellow radiology registrar Dr. Dan Kim, who led the project.
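To make the idea of layered feature detectors concrete, here is a minimal NumPy sketch; it is not the authors' code. A hand-set vertical-edge kernel (an assumption for illustration; a real CNN learns its kernels from labeled examples) is slid over a toy image and passed through a ReLU, and a second layer then averages neighboring edge responses into a coarser feature map.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` (no padding) and sum elementwise products.

    Like most CNN libraries, this is technically cross-correlation:
    the kernel is not flipped.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x: np.ndarray) -> np.ndarray:
    """Keep positive responses, zero out the rest."""
    return np.maximum(x, 0.0)

# Toy 6x6 "image": dark left half, bright right half (one vertical edge).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Layer 1: hand-set vertical-edge detector (hypothetical; a CNN would learn it).
edge_kernel = np.array([[-1.0, 0.0, 1.0]] * 3)
layer1 = relu(conv2d_valid(image, edge_kernel))

# Layer 2: a 2x2 averaging kernel combines neighboring layer-1 responses.
avg_kernel = np.ones((2, 2)) / 4.0
layer2 = relu(conv2d_valid(layer1, avg_kernel))
```

Each row of `layer1` responds only where the window straddles the edge, and `layer2` summarizes those responses over a wider area, which is the "increasing complexity" the researchers describe in miniature.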

"It is possible to adapt CNNs that have already been trained to recognize everyday objects such as buildings or cars. Typically these have been trained on huge datasets resulting in highly refined feature detectors in early layers of the network," they continued. "These feature detectors can be used as the building blocks to detect a new set of images. This is called transfer learning."

Building an image-analysis neural network from scratch is extremely computationally intensive, whereas using transfer learning allows new problems to be tackled substantially faster.
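The mechanics of transfer learning can be sketched as follows: earlier layers are kept fixed ("frozen") and only a new output layer is trained for the new task. In this toy NumPy version the "pretrained" weights `W_frozen` are random numbers standing in for a real network such as Inception v3, and `frozen_features`, `train_new_head`, `predict` and `log_loss` are hypothetical helpers; it illustrates only why retraining is cheap (few parameters are updated), not how the authors configured their network.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for a pretrained network's early layers. These weights are never
# updated during training ("frozen"). Real ones would come from a CNN trained
# on a large generic dataset; here they are random, purely for illustration.
W_frozen = rng.normal(size=(16, 8)) / 4.0

def frozen_features(x: np.ndarray) -> np.ndarray:
    """Apply the fixed feature detectors (linear map followed by ReLU)."""
    return np.maximum(x @ W_frozen, 0.0)

def predict(feats: np.ndarray, w: np.ndarray, b: float) -> np.ndarray:
    """New output layer: a sigmoid giving a score between 0 and 1."""
    return 1.0 / (1.0 + np.exp(-(feats @ w + b)))

def log_loss(p: np.ndarray, labels: np.ndarray) -> float:
    eps = 1e-12
    return float(-np.mean(labels * np.log(p + eps) + (1 - labels) * np.log(1 - p + eps)))

def train_new_head(feats, labels, lr=0.1, epochs=500):
    """Gradient descent on the new layer only; W_frozen is untouched."""
    w = np.zeros(feats.shape[1])
    b = 0.0
    for _ in range(epochs):
        grad = predict(feats, w, b) - labels   # d(log-loss)/d(logit)
        w -= lr * feats.T @ grad / len(labels)
        b -= lr * grad.mean()
    return w, b
```

Only the 8 weights and 1 bias of the new head are learned here, while the feature detectors are reused as-is.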

More than 11k wrist x-rays

The authors extended a previous study by preprocessing the radiographs with a semiautomated cropping process. The aim was to improve the accuracy of the network by removing unnecessary parts of the image. These radiographs were then used to retrain the Inception v3 network using the transfer-learning process.

Template matching. The original image (A) is preprocessed using a smoothing algorithm (B), followed by binary thresholding and matching of the template (D) to the most similar region of the preprocessed image (C, white box). The final outcome is shown in (E).

They ascertained the sensitivity and specificity of this model and assessed whether the semiautomated cropping process improves diagnostic accuracy in the detection of fractures on adult wrist radiographs.

A total of 11,112 lateral wrist radiographs produced at the Royal Devon and Exeter Hospital in Exeter between 1 January 2015 and 1 January 2016 were used to retrain the Inception v3 network after an eightfold amplification of the original images. To reduce overfitting, an automated technique was used to identify the anatomical region of interest and crop away the unnecessary portions of the image, a process termed region of interest focusing. Given the large sample size, automating the process was necessary for it to be feasible, according to Thurston and colleagues.
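The article does not say how the eightfold amplification was performed. One common way to get exactly eight variants of an image, shown here purely as an assumption, is to take the four 90-degree rotations of the image and of its mirror image. The helper `eightfold_augment` is hypothetical, and the authors may well have used different transformations.

```python
import numpy as np

def eightfold_augment(image: np.ndarray) -> list:
    """Return 8 variants: rotations by 0, 90, 180 and 270 degrees of the
    image and of its left-right mirror image (hypothetical scheme)."""
    variants = []
    for base in (image, np.fliplr(image)):
        for k in range(4):
            variants.append(np.rot90(base, k))
    return variants
```

For a non-symmetric image these eight arrays are all different, so each original radiograph would contribute eight training examples.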

The region of interest was defined using the Python OpenCV matchTemplate function.

"The method takes a template image and slides it across every position in the subject image (the wrist radiograph), returning the position in which the closest match was calculated," they said. "In this study, the region of interest was the distal radius. The template was, therefore, an anatomical representation of the distal radius."
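The sliding search described in the quote can be sketched in plain NumPy. With OpenCV itself this step would typically be a call such as `cv2.matchTemplate(image, template, cv2.TM_CCOEFF_NORMED)` followed by `cv2.minMaxLoc` on the result; the article does not say which matching method the authors chose, so the zero-mean normalized cross-correlation used here is an assumption, and `match_template` is a hypothetical helper.

```python
import numpy as np

def match_template(image: np.ndarray, template: np.ndarray):
    """Slide `template` over every position in `image` and return the
    (row, col) of the top-left corner with the highest zero-mean normalized
    cross-correlation, together with that score (1.0 = perfect match)."""
    th, tw = template.shape
    t = template - template.mean()
    t_norm = np.sqrt(np.sum(t * t))
    best_score, best_pos = -np.inf, (0, 0)
    for r in range(image.shape[0] - th + 1):
        for c in range(image.shape[1] - tw + 1):
            window = image[r:r + th, c:c + tw]
            w = window - window.mean()
            denom = t_norm * np.sqrt(np.sum(w * w))
            if denom == 0:
                continue  # flat window: correlation undefined, skip it
            score = np.sum(w * t) / denom
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos, best_score
```

The returned position plays the role of the "closest match" in the quote; in the study, the template represented the distal radius.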

The template was produced by using a representative lateral wrist radiograph and applying a smoothing algorithm, followed by a binary threshold to segment the bone. A scaled template matching approach was adopted to account for different wrist sizes.
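The template-production steps (smoothing, binary thresholding to segment bone, then rescaling for different wrist sizes) might look roughly like this. The box filter, nearest-neighbor resizing, threshold value and helper names are all stand-ins chosen for a dependency-light sketch; OpenCV offers equivalents such as `cv2.GaussianBlur`, `cv2.threshold` and `cv2.resize`, and the article does not give the authors' parameters.

```python
import numpy as np

def box_smooth(img: np.ndarray, k: int = 3) -> np.ndarray:
    """Mean filter over a k x k window with edge padding (a simple smoothing step)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros_like(img, dtype=float)
    for dr in range(k):
        for dc in range(k):
            out += padded[dr:dr + img.shape[0], dc:dc + img.shape[1]]
    return out / (k * k)

def binarize(img: np.ndarray, thresh: float) -> np.ndarray:
    """Binary threshold: 1.0 where brighter than `thresh` (bone), else 0.0."""
    return (img > thresh).astype(float)

def resize_nearest(img: np.ndarray, scale: float) -> np.ndarray:
    """Nearest-neighbor rescale by `scale`."""
    h = max(1, int(round(img.shape[0] * scale)))
    w = max(1, int(round(img.shape[1] * scale)))
    rows = np.minimum((np.arange(h) / scale).astype(int), img.shape[0] - 1)
    cols = np.minimum((np.arange(w) / scale).astype(int), img.shape[1] - 1)
    return img[np.ix_(rows, cols)]

def make_scaled_templates(radiograph, thresh, scales):
    """Smooth, threshold to segment bone, then produce one template per scale."""
    segmented = binarize(box_smooth(radiograph), thresh)
    return [(s, resize_nearest(segmented, s)) for s in scales]
```

In a scaled matching approach, each of these templates would be slid across the (similarly preprocessed) radiograph as described above, and the best score over all scales and positions would be kept.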

Similar preprocessing and an additional histogram-based normalization method were applied to all of the wrist radiographs, they added. Template matching was then used to identify the coordinates for automated cropping of the original image, which resulted in an image focused solely on the anatomical region of interest.
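The article does not name the histogram-based normalization. Classic histogram equalization (available in OpenCV as `cv2.equalizeHist` for 8-bit images) is one plausible choice and is assumed in the sketch below, together with a hypothetical `crop_to_match` helper that cuts the original image down to the matched region, with an optional margin that is also an assumption.

```python
import numpy as np

def equalize_histogram(img: np.ndarray) -> np.ndarray:
    """Histogram equalization for an 8-bit image: map each gray level through
    the normalized cumulative histogram so the levels spread over 0-255."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]
    total = cdf[-1]
    if total == cdf_min:  # only one gray level present: nothing to spread
        return img.copy()
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]

def crop_to_match(original, top_left, template_shape, margin=0):
    """Crop the original radiograph to the matched region (plus optional margin),
    clipped to the image borders."""
    r, c = top_left
    th, tw = template_shape
    r0, c0 = max(r - margin, 0), max(c - margin, 0)
    r1 = min(r + th + margin, original.shape[0])
    c1 = min(c + tw + margin, original.shape[1])
    return original[r0:r1, c0:c1]
```

Matching would run on the normalized, preprocessed copy, while the crop is taken from the original image, as the researchers describe.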

The Inception v3 network was then retrained using the region-of-interest focused radiographs. The model output was designed to produce a continuous value of between 0 and 1, with higher values indicating a fracture. A set of 50 "fracture" and 50 "no fracture" radiographs were used in final testing of the model.

Measuring success

Automated region-of-interest focusing was able to crop the image appropriately in 92.4% of cases, reported Thurston, who has a special interest in musculoskeletal radiology, open learning initiatives, and open-source software. The remainder of the cases were cropped manually.

The accuracy of the model improved when region-of-interest focusing was applied, reaching an area under the curve (AUC) of 0.978, compared with an AUC of 0.954 without region-of-interest focusing.
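For readers unfamiliar with the AUC, it can be computed directly from model scores: it equals the probability that a randomly chosen fracture case receives a higher score than a randomly chosen non-fracture case, with ties counted as half. The sketch below uses that rank-based definition; the function name is illustrative, and no study data are included.

```python
def auc(scores_pos, scores_neg):
    """Area under the ROC curve via pairwise comparison (Mann-Whitney form).

    scores_pos: model outputs for fracture cases
    scores_neg: model outputs for non-fracture cases
    """
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))
```

An AUC of 1.0 would mean every fracture image outscored every normal image; 0.5 is chance level.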

By setting the cutoff for fracture diagnosis on the network's 0-to-1 output score at > 0.4, the team achieved a sensitivity of 96% and a specificity of 94%.
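Applying such a cutoff is simple to express in code. If the reported figures refer to the 100-image test set described earlier, 96% sensitivity corresponds to 48 of the 50 fracture radiographs being called positive and 94% specificity to 47 of the 50 normal radiographs being called negative. The function below is an illustrative sketch; note that a score of exactly 0.4 is treated as "no fracture" under a strict "> 0.4" rule.

```python
def sensitivity_specificity(scores, labels, cutoff=0.4):
    """labels: 1 = fracture, 0 = no fracture.
    A score strictly above `cutoff` is called a fracture."""
    tp = fn = tn = fp = 0
    for score, label in zip(scores, labels):
        called_fracture = score > cutoff
        if label == 1:
            if called_fracture:
                tp += 1  # fracture correctly detected
            else:
                fn += 1  # fracture missed
        else:
            if called_fracture:
                fp += 1  # false alarm
            else:
                tn += 1  # normal correctly cleared
    return tp / (tp + fn), tn / (tn + fp)
```

Raising the cutoff would generally trade sensitivity for specificity, and lowering it would do the reverse.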

To read more about the Plymouth/Exeter group's research, check out their full article in the May 2018 edition of Clinical Radiology. The authors have also worked on AI in nuclear medicine: automated interpretation of Ioflupane-123 DaTScan for Parkinson's disease using deep learning.