Beware the dangers of intuitive thinking. That's the central message of new award-winning research from the Netherlands designed to highlight why cognitive errors occur and how they can be avoided.

"Understanding cognitive biases provides important insight into how radiologists think. Recognizing cognitive biases is the first step to reduce future errors. Forewarned is forearmed!" stated Dr. Gerrit Jager, PhD, and colleagues from the department of radiology at Jeroen Bosch Hospital in 's-Hertogenbosch, the Netherlands. "We should focus on increasing the visibility of diagnostic errors and setting research priorities to study potential avenues for improving diagnostic accuracy in the future, such as interventions aimed at improved cognition."

As the second leading cause of avoidable hospital deaths, diagnostic errors are a major healthcare concern that deserves far more attention than it has received, they wrote in an e-poster presentation that received a cum laude award from the judges at last month's ECR 2014 in Vienna. A blame-free climate in which errors are discussed on a regular basis helps to provide insight into the cognitive root causes of error, they added.

The authors are convinced that the process can be improved by creating a quiet working environment "with almost no distractions." To improve the performance of radiologists and others in daily practice, they provide a list of 20 practical suggestions on how to avoid pitfalls and cognitive biases (see sidebar).

It is really good to see this topic getting coverage in the radiology sphere, noted Dr. Catherine Mandel, the lead radiologist with the Radiology Events Register (RaER) and consultant radiologist at the Peter MacCallum Cancer Centre in Melbourne, Australia. She said she's a great believer in the risks of intuitive thinking, and includes this subject in lectures to radiology registrars (trainees). She recommends the work of Dr. Pat Croskerry, PhD, a professor in emergency medicine at Dalhousie University in Halifax, Nova Scotia, Canada, who has studied the impact of cognitive and affective error on clinical decision-making, specifically on diagnostic errors.

In their research, Jager and colleagues aimed to provide details about the concept of two thinking systems: unconscious routine (thinking fast) and analytical thinking (thinking slow). They sought to explain why both are prone to failed heuristics (i.e., experience-based techniques for problem-solving, learning, and discovery) and cognitive biases.

Diagnostic errors can be divided into no-fault, system-related, and cognitive errors. Cognitive errors may be due to failure of perception, lack of knowledge, or faulty reasoning. Studies in the field of cognitive psychology indicate that the reasoning skills of clinicians are imperfect and that radiologists are prone to cognitive errors, according to the authors. Their e-poster focused on faulty reasoning in diagnostic radiology.

There are more than 50 known cognitive biases described in the literature, but Jager and colleagues singled out the most important ones in radiology:

Hindsight bias ("I knew it all along bias"), which refers to radiologists' tendency to overestimate their capability to make a particular diagnosis when they already know, in retrospect, the correct diagnosis. Hindsight bias leads to overconfidence in assessing diagnostic accuracy and inadequate appreciation of the original difficulty in making diagnoses.

Anchoring, which involves the tendency to latch on, or anchor, to the first bit of data and fail to consider the full spectrum of information, leading to a misdiagnosis.

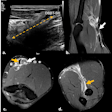

Satisfaction of search, which refers to the tendency to terminate the search for abnormalities once an abnormality has been found. It may occur when the referring physician asks for assessment of a single diagnosis, instead of an explanation of the symptoms.

Inattentional blindness, which occurs when a person fails to notice a stimulus that is in plain sight. The stimulus is usually unexpected but clearly visible.

Expectation bias, attribution bias, and first impression, which occur when we ascribe characteristics to a person simply because that person belongs to a certain class.

Confirmation bias, which leads physicians to collect and interpret information in a way that confirms the initial diagnosis. Physicians have been shown to actively seek out and assign more weight to the evidence that confirms their hypothesis, and ignore or underweight evidence that could disconfirm their hypothesis.

Alliterative error bias, which occurs when previous reports cloud the judgment of the current interpretation. If one radiologist attaches the wrong significance to an abnormality that is easily perceived, the chance that a subsequent radiologist will repeat the same error is increased.

Framing, in which a radiologist's judgment is strongly influenced by how a case is presented.

Group pressure or conformity, which is due to a person's basic need to conform to a group.

Dunning-Kruger effect, a cognitive bias in which the unskilled suffer from illusory superiority, mistakenly rating their ability much higher than average, while the highly skilled tend to underrate their own abilities. This bias is attributed to an inability of the unskilled to recognize their mistakes.

Illusion of competence, overconfidence bias, and illusion of confidence: "Our intuitive inclination is to assume that one's confidence is a measure of one's competence. Therefore an 'unskilled' radiologist who wants to express confidence is not effortlessly searching for other possible alternatives in an emergency setting, nor consulting a colleague in another hospital," Jager and colleagues wrote.